The term Big Data has become ubiquitous, and every firm, whether big or small, wants to harness it. Electronic devices all over the world generate a vast amount of data every second. Many business decisions related to pricing, product features, and marketing can be made by processing these data sets. Data can be considered digital gold, as it can be leveraged to gain insights into consumer behavior.

Many programming languages and software packages can be used to process data and generate reports in the form of tables, charts, or graphs. A careful study of these data representations can help find trends, patterns, and consumer preferences. Professionals who work on this data are called Data Scientists. R and Python are two of the most popular programming languages used for Big Data and Machine Learning.

Technical Terms in the Big Data World

Database

This term first came into use in the 1960s, and relational databases became popular in the 1980s. Databases are used to store and query structured data in real time, so the most recent data is always available.

Data Warehouse

A Data Warehouse is a model that moves data from operational systems into decision-support systems. Businesses realized that data was coming from multiple places and needed a single place where it could be analyzed.

Data Lake

This term came into existence in the 2000s, and it refers to storing unstructured data in a more cost-effective way. Both Databases and Data Warehouses can store unstructured data, but they do not do it efficiently. With so much data generated, storing all of it becomes very expensive, and loading it into a fixed structure also takes time and effort. Data lakes came to the forefront to counter these issues.

Big Data Characteristics

Volume

This refers to the size of the data that is generated and stored. Big Data sets are generally measured in terabytes and petabytes.

Variety

This refers to the nature of the data that is being collected and generated. Relational Database Management Systems (RDBMSs) handle structured data very effectively, but they were not designed for the semi-structured and unstructured data that followed. As a result, Big Data technologies came into existence to analyze these types of data. Big Data comprises data from text, images, audio, and video.

Velocity

The rate at which data is generated is called its Velocity, and in the case of Big Data, it is generated very quickly.

A Big Data Developer Should be Well Versed in the Following

1. Big Data Frameworks or Hadoop-based Technologies

Hadoop, created by Doug Cutting and Mike Cafarella, is a big name in Big Data. The Hadoop framework stores data in a distributed manner and processes it in parallel. Hadoop has also become the foundation for many other Big Data technologies, so learning it should be a priority for any aspiring Big Data developer. Hadoop consists of different tools that can be used for various purposes.

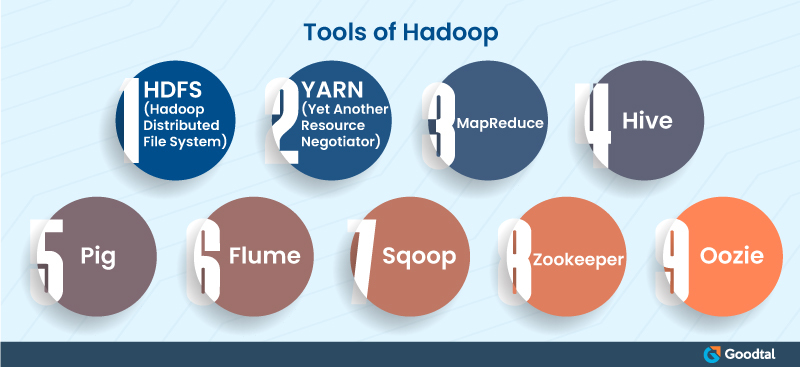

Tools of Hadoop

- HDFS (Hadoop Distributed File System)

HDFS is the storage layer of Hadoop, which stores data across clusters of commodity hardware. An aspiring Big Data developer should begin by learning this core component of Hadoop.

- YARN (Yet Another Resource Negotiator)

YARN manages the resources of the Hadoop cluster and allocates them to the applications running on it. YARN also performs job scheduling, making Hadoop more flexible, scalable, and efficient.

- MapReduce

MapReduce is the processing heart of the Hadoop framework. It processes data in parallel across clusters of inexpensive hardware.

- Hive

An open-source data warehousing tool built on top of Hadoop. Developers can run SQL-like queries on vast amounts of data stored in Hadoop HDFS.

- Pig

A platform with its own high-level scripting language, Pig Latin, which researchers can use for data transformation.

- Flume

Flume is used for importing large amounts of event or log data from different web servers into Hadoop HDFS.

- Sqoop

It is a tool used to import data from relational databases like MySQL and Oracle into Hadoop HDFS, or to export it back.

- Zookeeper

It acts as a coordinator among the distributed services running within the Hadoop cluster. Zookeeper makes it possible to manage and coordinate a large cluster of machines.

- Oozie

A workflow scheduler for managing Hadoop jobs. It combines several tasks into a single unit of work and runs them in the required order.
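To make the MapReduce idea from the list above more concrete, here is a minimal sketch of its classic word-count example in plain Python. It runs on a single machine and does not use Hadoop's API; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`) and the sample input are assumptions made for illustration only.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by their key (the word)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: sum the counts collected for each word."""
    return {word: sum(counts) for word, counts in grouped.items()}


def word_count(documents):
    # On a real cluster each split would be mapped on a different node;
    # here the splits are simply processed one after another.
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return reduce_phase(shuffle_phase(mapped))


# Hypothetical input splits, standing in for blocks of a large file.
splits = ["big data needs big clusters", "data lakes store raw data"]
counts = word_count(splits)
print(counts["data"])  # 3
print(counts["big"])   # 2
```

On a real cluster, the map and reduce steps would run on many nodes at once, and the framework would handle the shuffle between them.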

2. Real-Time Processing Frameworks Such as Apache Spark

Real-time processing is the need of the hour, and Apache Spark can provide that capability. It is required in applications like fraud-detection systems or recommendation systems. Apache Spark is a distributed processing framework that can handle data in near real time, which makes it a strong choice for Big Data developers.
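The difference between real-time and batch processing is that each event is handled as it arrives rather than after all the data has been collected. The sketch below shows that idea for a toy fraud check in plain Python. It is not Spark code, and the class name `RapidPaymentDetector`, the rule it applies, and the sample events are all hypothetical.

```python
from collections import defaultdict, deque


class RapidPaymentDetector:
    """Flag a card that makes more than `max_events` payments
    within a sliding window of `window_seconds`."""

    def __init__(self, max_events=3, window_seconds=60):
        self.max_events = max_events
        self.window_seconds = window_seconds
        # card_id -> timestamps of payments still inside the window
        self._recent = defaultdict(deque)

    def process(self, card_id, timestamp):
        """Handle one event as it arrives; return True if it looks suspicious."""
        window = self._recent[card_id]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while timestamp - window[0] >= self.window_seconds:
            window.popleft()
        return len(window) > self.max_events


detector = RapidPaymentDetector(max_events=3, window_seconds=60)
# Hypothetical stream of (card_id, seconds since start) events.
events = [("card-1", 0), ("card-1", 10), ("card-2", 15),
          ("card-1", 20), ("card-1", 30), ("card-1", 200)]
flags = [detector.process(card, ts) for card, ts in events]
print(flags)  # [False, False, False, False, True, False]
```

A streaming framework applies the same pattern, but distributes the per-key state across a cluster and recovers it after failures.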

3. SQL

Structured Query Language (SQL) is a long-established language used for storing, managing, and processing structured data held in relational databases. The Big Data era began with SQL as the base; hence, knowledge of SQL is an added advantage.
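As a small illustration of what SQL does with structured data, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `orders` table and its rows are made up for the example.

```python
import sqlite3

# An in-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")

# Structured data: every row follows the same fixed schema.
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 30.0), ("bob", 12.5), ("alice", 20.0)],
)

# Aggregate the total spend per customer, largest first.
rows = conn.execute(
    "SELECT customer, SUM(amount) AS total "
    "FROM orders GROUP BY customer ORDER BY total DESC"
).fetchall()
print(rows)  # [('alice', 50.0), ('bob', 12.5)]

conn.close()
```

The same `SELECT ... GROUP BY` pattern carries over, with minor dialect differences, to SQL-on-Hadoop tools such as Hive.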

4. NoSQL

SQL databases are designed for structured data, but a lot of semi-structured and unstructured data is generated. NoSQL databases can handle all three types of data, i.e., unstructured, semi-structured, and structured data. Some of the popular NoSQL databases are Cassandra, MongoDB, and HBase.

- Cassandra

Cassandra is a highly scalable database that provides high availability without compromising performance. It provides fast random reads and writes.

- HBase

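It is a column-oriented database built on top of Hadoop HDFS. HBase provides quick, random, real-time read/write access to the data stored in the Hadoop File System. In terms of the CAP theorem, under which a distributed system cannot fully guarantee consistency, availability, and partition tolerance all at once, HBase favors consistency and partition tolerance.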

- MongoDB

It is a general-purpose document-oriented database. MongoDB provides availability, scalability, and high performance. In terms of the CAP theorem, it favors consistency and partition tolerance.
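The semi-structured data that document-oriented databases handle can be pictured as records that do not all share the same fields. The sketch below is a plain Python illustration of that model, not the API of MongoDB or any other database. The `find` helper, the dotted-path convention, and the sample documents are assumptions made for the example.

```python
_MISSING = object()  # sentinel for "this document has no such field"

# Each document is free to have its own set of fields, including nested ones.
documents = [
    {"_id": 1, "name": "Alice", "email": "alice@example.com"},
    {"_id": 2, "name": "Bob", "tags": ["premium"], "address": {"city": "Pune"}},
    {"_id": 3, "name": "Chen", "address": {"city": "Pune", "zip": "411001"}},
]


def find(collection, path, value):
    """Return the documents whose dotted `path` (e.g. "address.city")
    exists and equals `value`."""
    matches = []
    for doc in collection:
        current = doc
        for key in path.split("."):
            if isinstance(current, dict) and key in current:
                current = current[key]
            else:
                current = _MISSING
                break
        if current is not _MISSING and current == value:
            matches.append(doc)
    return matches


in_pune = find(documents, "address.city", "Pune")
print([doc["name"] for doc in in_pune])  # ['Bob', 'Chen']
```

A relational table would need a fixed set of columns (and `NULL`s or extra tables) to hold the same records, which is the gap NoSQL databases aim to fill.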

5. Programming Language

Another requirement to be a Big Data developer is to be well versed in coding. Big Data developers should have knowledge of data structures, algorithms, and at least one programming language. Different languages have different syntax, although the logic remains the same. For beginners, Python is a good option because of its simplicity and its rich statistical libraries.

6. Data Visualization Tools

Big Data developers need to interpret the data by visualizing it. Mathematical and scientific knowledge is required to understand and visualize the data. QlikView, QlikSense, and Tableau are some of the popular data visualization tools.

7. Data Mining

Data Mining is about extracting useful patterns and knowledge from vast amounts of stored data. Tools for Data Mining include Apache Mahout, RapidMiner, KNIME, etc.

8. Machine Learning

This is the most popular field of Big Data as it helps in developing personalization, classification, and recommendation systems. Knowledge of Machine Learning algorithms is a necessity to become a successful Data Analyst.

9. Statistical & Quantitative Analysis

Big Data is all about numbers, and quantitative and statistical analysis is the most crucial part of Big Data analysis. Statistical concepts like probability distributions, correlation, and the binomial distribution should be clear. Statistical software like R and SPSS should also be learned, as it helps in analyzing data.
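Two of the concepts named above, correlation and the binomial distribution, can be worked through in a few lines of Python using only the standard library. This is a minimal sketch: the helper names `pearson_correlation` and `binomial_pmf` and the sample figures are chosen for illustration.

```python
import math


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient of two equally long samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(spread_x * spread_y)


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials,
    each succeeding with probability p."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


# Hypothetical data: sales rise in exact proportion to ad spend.
ad_spend = [1, 2, 3, 4, 5]
sales = [2, 4, 6, 8, 10]
print(pearson_correlation(ad_spend, sales))  # 1.0

# Chance of exactly 2 heads in 4 fair coin flips.
print(binomial_pmf(2, 4, 0.5))  # 0.375
```

A correlation of 1.0 means the two series move together perfectly. Real data will usually fall somewhere between -1 and 1.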

10. Creativity & Problem-Solving Ability

Big Data Developers need to analyze data and come up with solutions for the questions under consideration. As a result, problem-solving ability and creativity are also required to be a successful Big Data Developer.

So, to Cut Big Data Small…

More than 2.5 quintillion bytes of data (about 2.5 exabytes) are created every day, which is a staggering amount. This makes it a challenge to interpret and make sense of all this information. Big Data developers are the professionals best placed to help with analyzing this data.

“The global Big Data market is estimated to grow to USD 103 billion by 2027” - Statista

Customers want responses in real time, so the critical factor here is speed: there is no point in having insights after the customer has walked out of the door. Big Data analysis may also help improve core operations and create new avenues for business. So, if your business generates a huge amount of data and you want to use it to make logical and scientific decisions, hire talented Big Data developers recommended on Goodtal.